When working with OpenAI’s API, one of the most critical aspects to understand is the context window. The context window determines how much text the model can process at a time, including both the input (prompt) and the output (generated response). Optimizing your use of the context window can significantly enhance the effectiveness of AI-powered applications, from chatbots to document summarization tools.

1. What is a Context Window?

The context window refers to the total number of tokens that a model can consider during a single request. It includes:

- Input tokens: The tokens in the prompt or message history.

- Output tokens: The tokens generated by the model in response.

For example, if a model has a context window of 128,000 tokens, this means that the sum of input and output tokens must not exceed this limit.

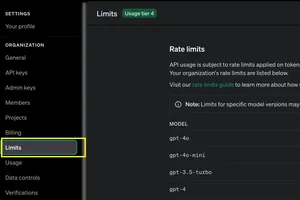

2. Context Window for Different OpenAI Models

Different OpenAI models have different context window limits. Here’s how they compare:

| Model | Maximum Context Window (Tokens) |

|---|---|

| GPT-4o | 128,000 tokens |

| GPT-4 | 32,000 tokens |

| GPT-3.5-turbo | 16,000 tokens |

Understanding these limits is crucial when designing prompts or implementing AI applications, ensuring you don’t exceed the model’s token capacity.

3. Why is the Context Window Important?

a. Managing Long Conversations

For applications like AI-powered chatbots, maintaining conversation history within the context window is essential. If the conversation exceeds the model’s token limit, earlier messages may be truncated, potentially causing loss of context.

b. Processing Large Documents

If you’re using OpenAI’s API for document summarization or analysis, the model can only consider a limited portion of the document within a single request. Splitting documents into chunks that fit within the context window is a best practice.

c. Balancing Input and Output Length

Since both input and output count toward the total token limit, a very long prompt may leave insufficient room for the model’s response. It’s important to allocate space effectively.

4. Optimizing Context Window Usage

a. Trimming Unnecessary Text

Avoid redundant or irrelevant details in your prompts. Keep instructions concise to maximize response length.

b. Using Summarization Techniques

Instead of feeding lengthy chat histories, summarize previous interactions to preserve context while minimizing token usage.

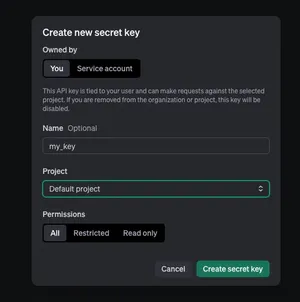

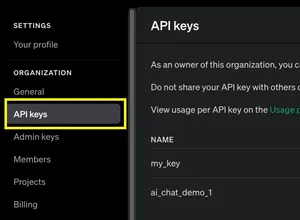

c. Implementing Token Management

Use OpenAI’s token counting tools to estimate and manage token usage before making API calls.

import tiktoken

def count_tokens(text, model="gpt-4"):

enc = tiktoken.encoding_for_model(model)

return len(enc.encode(text))

text = "Understanding OpenAI API context windows is crucial for developers."

print("Token count:", count_tokens(text))d. Adjusting max_tokens Parameter

When making API requests, set max_tokens to prevent the model from generating excessively long responses that might exceed token limits.

import openai

import asyncio

async def generate_response(prompt):

client = openai.AsyncOpenAI(api_key="your-api-key")

response = await client.chat.completions.create(

model="gpt-4o",

messages=[{"role": "user", "content": prompt}],

max_tokens=200 # Limit response length

)

return response.choices[0].message.content

prompt = "Explain how the OpenAI API context window works."

print(asyncio.run(generate_response(prompt)))5. Challenges When Exceeding the Context Window

If your request exceeds the model’s token limit, OpenAI will truncate earlier parts of the input, which may cause:

- Loss of context in conversations.

- Incomplete or inaccurate responses.

- Unexpected truncation of responses.

To prevent this, always monitor token usage, summarize where necessary, and limit unnecessary input.

6. Conclusion

The context window is a fundamental concept in using OpenAI’s API effectively. Understanding its limitations and best practices for managing it ensures better AI-generated responses, improved performance, and cost efficiency. By keeping your prompts concise, managing conversation histories wisely, and setting appropriate token limits, you can optimize your use of OpenAI’s models to build powerful AI-driven applications.