Text embeddings are a powerful way to convert text into numerical representations that capture semantic meaning. OpenAI provides a robust API for generating embeddings, which can be used for various applications such as search, clustering, recommendation systems, and natural language processing (NLP) tasks.

In this guide, we’ll explore how to generate text embeddings using the OpenAI API with Python asynchronously and discuss some practical applications.

Prerequisites

Before you begin, make sure you have:

- Python 3.8 or later installed on your system.

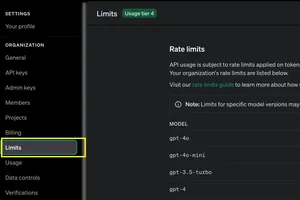

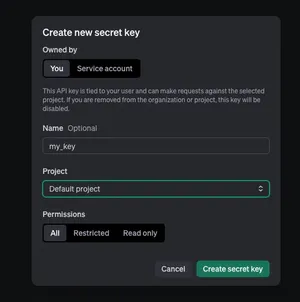

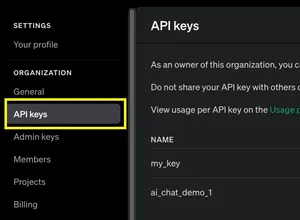

- An OpenAI API key. If you don't know how to get one, check this article first: How to create a new OpenAI API key

- The OpenAI Python library installed. You can install or upgrade it with:

pip install --upgrade openai

Understanding Text Embeddings

Text embeddings are numerical vector representations of text that preserve semantic meaning. They enable efficient comparison of texts based on their meanings rather than just keyword matching. OpenAI’s embedding models, like text-embedding-ada-002, generate high-quality embeddings for a wide range of text processing tasks.

Generating Embeddings with OpenAI API

Let’s start with a basic example to generate embeddings for a given text using async/await.

Basic Example: Generating a Single Text Embedding

import asyncio

import openai

# Use your actual OpenAI API key here

# You can directly set it like this: OPENAI_API_KEY = "your key here"

# Or you can use an environment variable like this: OPENAI_API_KEY = os.environ.get("OPENAI_API_KEY")

from config import OPENAI_API_KEY

async def get_embedding(text):

client = openai.AsyncOpenAI(api_key=OPENAI_API_KEY)

response = await client.embeddings.create(

model="text-embedding-ada-002",

input=text

)

return response.data[0].embedding

async def main():

text = "Welcome to KhueApps.com"

embedding = await get_embedding(text)

print(f"Embedding vector (truncated): {embedding[:5]}...")

# Execute the main function

asyncio.run(main())Output:

Embedding vector (truncated): [0.0113869915, 0.015618371, 0.012323555, -0.046140898, -0.011494798]...

Embedding vector (truncated): [0.0113869915, 0.015618371, 0.012323555, -0.046140898, -0.011494798]...Explanation:

- The function

get_embeddinginitializes anAsyncOpenAIclient and sends an async request to OpenAI. - It requests embeddings from the

text-embedding-ada-002model. - The response contains a list of embeddings, where

response.data[0].embeddingprovides the vector representation. - The

mainfunction callsget_embeddingand prints a truncated version of the embedding.

Handling Multiple Texts Efficiently

Often, you’ll need embeddings for multiple texts at once. Instead of making multiple API calls sequentially, you can process them concurrently.

import asyncio

import openai

# Use your actual OpenAI API key here

# You can directly set it like this: OPENAI_API_KEY = "your key here"

# Or you can use an environment variable like this: OPENAI_API_KEY = os.environ.get("OPENAI_API_KEY")

from config import OPENAI_API_KEY

async def get_embeddings(texts):

client = openai.AsyncOpenAI(api_key=OPENAI_API_KEY)

response = await client.embeddings.create(

model="text-embedding-ada-002",

input=texts

)

return [item.embedding for item in response.data]

async def main():

texts = [

"OpenAI’s API is great for text processing.",

"Embeddings help capture semantic meaning in text.",

"Machine learning models benefit from embeddings."

]

embeddings = await get_embeddings(texts)

for i, emb in enumerate(embeddings):

print(f"Embedding {i+1} (truncated): {emb[:5]}...")

# Execute the main function

asyncio.run(main())Output:

Embedding 1 (truncated): [-0.0076874406, 0.003729384, -0.0007284741, -0.010027974, 0.012852754]...

Embedding 2 (truncated): [-0.033824936, 0.006223918, 0.017638214, -0.009447655, 0.005206578]...

Embedding 3 (truncated): [-0.03423901, 0.0039687324, 0.018734802, -0.026347883, 0.0061202557]...

Embedding 1 (truncated): [-0.0076874406, 0.003729384, -0.0007284741, -0.010027974, 0.012852754]...

Embedding 2 (truncated): [-0.033824936, 0.006223918, 0.017638214, -0.009447655, 0.005206578]...

Embedding 3 (truncated): [-0.03423901, 0.0039687324, 0.018734802, -0.026347883, 0.0061202557]...Explanation:

- This example uses the asynchronous

AsyncOpenAIclient to handle multiple requests concurrently. - The

get_embeddingsfunction fetches embeddings for multiple texts in a single request, reducing overhead. - The

mainfunction callsget_embeddingsand prints truncated embedding vectors.

Use Cases for Text Embeddings

1. Text Similarity Search

Embeddings allow you to measure the similarity between texts using cosine similarity. This is useful for document search, recommendation systems, and clustering.

2. Semantic Search

Instead of relying on keyword matching, embeddings help find documents related in meaning, even if they don’t contain the same words.

3. Clustering and Classification

Embeddings enable grouping similar texts together, improving NLP classification tasks like sentiment analysis and topic modeling.

4. Anomaly Detection

By analyzing embedding vectors, you can detect outliers in a dataset, useful for fraud detection or unusual behavior identification.

Best Practices

- Batch Requests: If you need embeddings for many texts, group them into batches to minimize API calls and improve efficiency.

- Normalize Embeddings: Many applications benefit from normalizing embeddings to unit vectors before performing similarity comparisons.

- Store and Reuse Embeddings: Save generated embeddings in a database to avoid redundant API calls and reduce costs.

Conclusion

OpenAI’s text embedding API provides an efficient way to convert text into meaningful numerical representations. Whether you’re building a search engine, recommendation system, or NLP model, embeddings help enhance text understanding and processing. By leveraging asynchronous calls and batch processing, you can optimize performance and cost-efficiency.

Now that you have the basics, try integrating text embeddings into your next project!